A low-latency network connection experiences short delays, while a high-latency connection experiences long delays. There are no standards that govern what counts as high and what counts as low. What we call low latency is usually low compared with the average in that field of broadcasting. Online video streaming has a wide latency range, with higher values resting between 30 and 60 seconds.

Network Latency Definition Causes Measure And Resolve Issues

NVIDIA low latency mode is a new feature in NVIDIA's graphics driver, intended for competitive gamers and anyone else who wants the fastest input response in their games.

Low latency definition: latency problems can be magnified for cloud service communications, which can be especially prone to latency. Cloud service latency is the delay between a client request and a cloud service provider's response. In practical terms, latency is the time between a user action and the response from the website or application to that action, for instance the delay between when a user clicks a link to a webpage and when the browser displays that webpage.

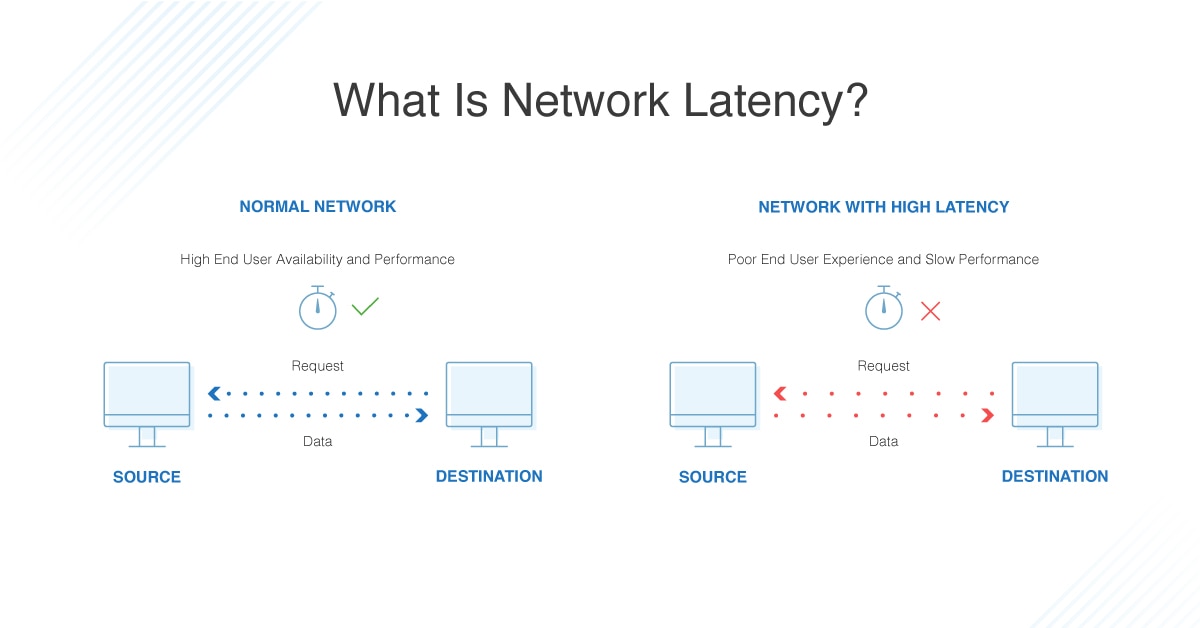

That said, it's a subjective term. Network connections in which small delays occur are called low-latency networks, whereas network connections that suffer from long delays are called high-latency networks. Low latency describes a computer network that is optimized to process a very high volume of data messages with minimal delay (latency).

Low latency keeps the delay between an input being processed and the corresponding output below what a human can notice, giving the system real-time characteristics. Low latency modes have the most impact when your game is GPU-bound and frame rates are between 60 and 100 FPS, enabling you to get the responsiveness of high-frame-rate gaming without having to decrease graphical fidelity. The NVIDIA low latency mode feature is available for all NVIDIA GeForce GPUs in the NVIDIA Control Panel.

When it comes to streaming, low latency describes a glass-to-glass delay of five seconds or less. Ultra-low latency describes a computer network that is optimized to process a very high volume of data packets with an extraordinarily low tolerance for delay (latency). High latency creates bottlenecks in any network communication.

Network latency is the term used for any kind of delay that happens in data communication over a network. In computer networking, latency is an expression of how much time it takes for a data packet to travel from one designated point to another. Low-latency networks are designed to support real-time access and response to rapidly changing data.
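One rough way to observe this delay is to time how long a TCP connection takes to open, since the handshake needs about one round trip between the two points. The following is a minimal sketch under that assumption, not a standard measurement tool. The function names `tcp_connect_latency_ms` and `median_latency_ms` and the target `example.com:443` are illustrative choices, and the result also includes local socket setup overhead and any DNS lookup time.

```python
import socket
import statistics
import time


def tcp_connect_latency_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Return the time, in milliseconds, taken to open a TCP connection."""
    start = time.perf_counter()
    # create_connection blocks until the TCP handshake completes or times out.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def median_latency_ms(host: str, port: int, samples: int = 5) -> float:
    """Take several samples and return the median, which damps outliers."""
    readings = [tcp_connect_latency_ms(host, port) for _ in range(samples)]
    return statistics.median(readings)


if __name__ == "__main__":
    # Hypothetical target; replace with a host you are allowed to probe.
    print(f"median connect latency: {median_latency_ms('example.com', 443):.1f} ms")
```

Taking the median of several samples is a design choice here: a single reading can be skewed by a momentary queue or a cold DNS cache.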

A voice call on a high-latency network results in a time lag between the speakers, even if there is enough bandwidth. Latency and throughput are the two most fundamental measures of network performance. This can be especially important for internet connections used for services such as trading, online gaming, and VoIP.

The fact of being present but needing particular conditions to become active, obvious, or... Disk latency is why reading or writing large numbers of files is typically much slower than reading or writing a single contiguous file. Latency greatly affects how usable and enjoyable devices and communications are.

Low latency indicates high network efficiency. Latency and throughput are closely related, but whereas latency measures the amount of time between the start of an action and its completion, throughput is the total number of such actions that occur in a given amount of time. Low-latency networks are important for streaming services.
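The difference between the two measures can be shown with a small worked example. This is an illustrative sketch with made-up timings: the `Request` type and both helper functions are hypothetical names, and the numbers are chosen only to show that the same latency can coexist with very different throughput.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    start: float  # time the action started, in seconds
    end: float    # time the action completed, in seconds


def mean_latency_s(requests: List[Request]) -> float:
    """Average time between the start of an action and its completion."""
    return sum(r.end - r.start for r in requests) / len(requests)


def throughput_per_s(requests: List[Request]) -> float:
    """Number of completed actions divided by the observed time window."""
    window = max(r.end for r in requests) - min(r.start for r in requests)
    return len(requests) / window


# Four requests that each take 2 seconds, handled one after another.
serial = [Request(2.0 * i, 2.0 * i + 2.0) for i in range(4)]
# The same four requests handled at the same time.
concurrent = [Request(0.0, 2.0) for _ in range(4)]

for name, batch in (("serial", serial), ("concurrent", concurrent)):
    print(name, mean_latency_s(batch), "s latency,",
          throughput_per_s(batch), "requests/s")
```

Both batches have a mean latency of 2 seconds, yet the concurrent batch completes four times as many requests per second.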

What is NVIDIA Low Latency Mode, and how do you enable it? There are other types of latency as well. Since SSDs do not rotate like traditional HDDs, they have much lower latency.

Voice streaming needs very little bandwidth (4 kbps for telephone quality, as far as I recall) but needs the packets to arrive fast. Low-latency networks are designed to support operations that require near-real-time access to rapidly changing data. The term latency refers to any of several kinds of delays typically incurred in the processing of network data.
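The point about voice can be made concrete with a deliberately simplified model in which delivery time is the one-way latency plus the time needed to push the bits onto the link. Everything beyond the 4 kbps figure is an assumption made for illustration: the 20 ms frame length, the link speeds, the 300 ms latency, and the function name `delivery_time_s`. The model ignores queuing, packet headers, and jitter.

```python
def delivery_time_s(size_bytes: int, bandwidth_bps: float, latency_s: float) -> float:
    """Simplified model: one-way latency plus serialization time."""
    return latency_s + (size_bytes * 8) / bandwidth_bps


VOICE_BITRATE_BPS = 4_000  # telephone-quality figure quoted in the text
FRAME_MS = 20              # assumed frame length, not from the source

# Payload of one voice frame: 4000 bit/s * 20 ms = 80 bits = 10 bytes.
frame_bytes = VOICE_BITRATE_BPS * FRAME_MS // 1000 // 8

# Same assumed 300 ms latency, two very different link speeds.
for bandwidth_bps in (1_000_000, 100_000_000):
    t = delivery_time_s(frame_bytes, bandwidth_bps, latency_s=0.300)
    print(f"{bandwidth_bps / 1e6:>5.0f} Mbps link: frame arrives after {t * 1000:.4f} ms")
```

Under these assumptions, raising the bandwidth a hundredfold changes the arrival time by less than a tenth of a millisecond, while the 300 ms of latency is untouched.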

In telecommunications, low latency is associated with a positive user experience (UX), while high latency is associated with poor UX. Latency is a synonym for delay. Other applications where latency is important.

Network latency refers specifically to delays that take place within a network or on the Internet. The popular Apple HLS streaming protocol defaults to approximately 30 seconds of latency (more on this below), while traditional cable and satellite broadcasts are viewed with about a five-second delay behind the live event.